Why I do not have a strongly bimodal prediction of the distribution of future value

The standard (i.e. extremely simplified) LessWrong (henceforth "rationalists") story of the future goes as follows: sometime in the relatively near future an incredibly powerful AI is going to be created. If we have not solved "the alignment problem" and applied the solution to that AI, then all humans immediately die. If we have solved it and applied it, then ~utopia.

From this, rationalists derive two important numerical values which serve as the best 2-dimensional approximation of one's forecast of the future: "p(doom)", the probability of the first scenario above, and "timelines", the amount of time until that AI (often called "AGI") is created. (I know that e.g. Yudkowsky has spoken of his dislike of the use of p(doom), but note again that my construction here is not about what any specific rationalist "thought leader" necessarily thinks, but rather about the memetic residue of rationalists.)

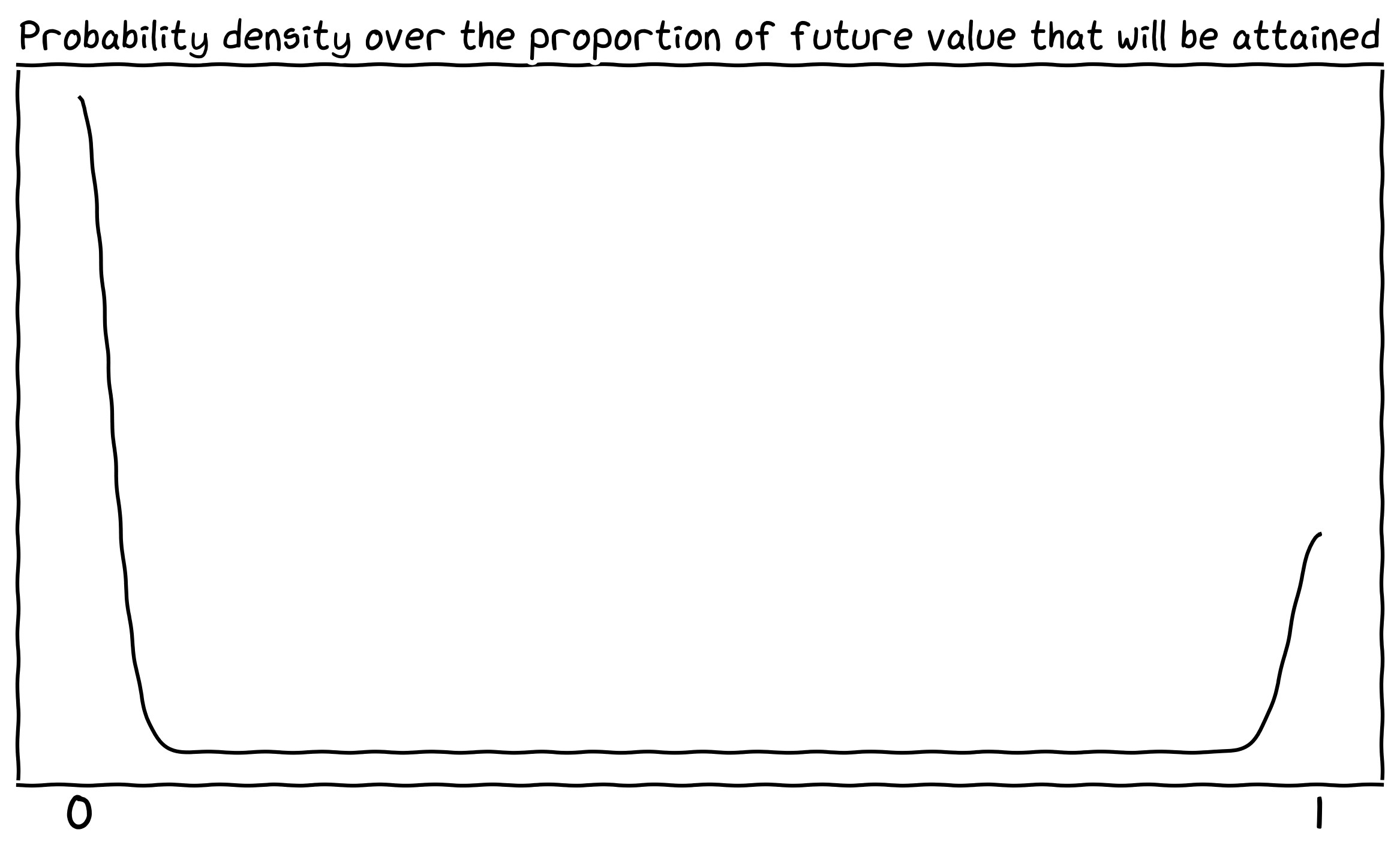

Mapping the standard rationalist story onto the distribution of future value (with possible futures weighted by their probability), we obtain a strongly bimodal distribution, with peaks at ~0% value and ~100% value. The probability density looks something like this:

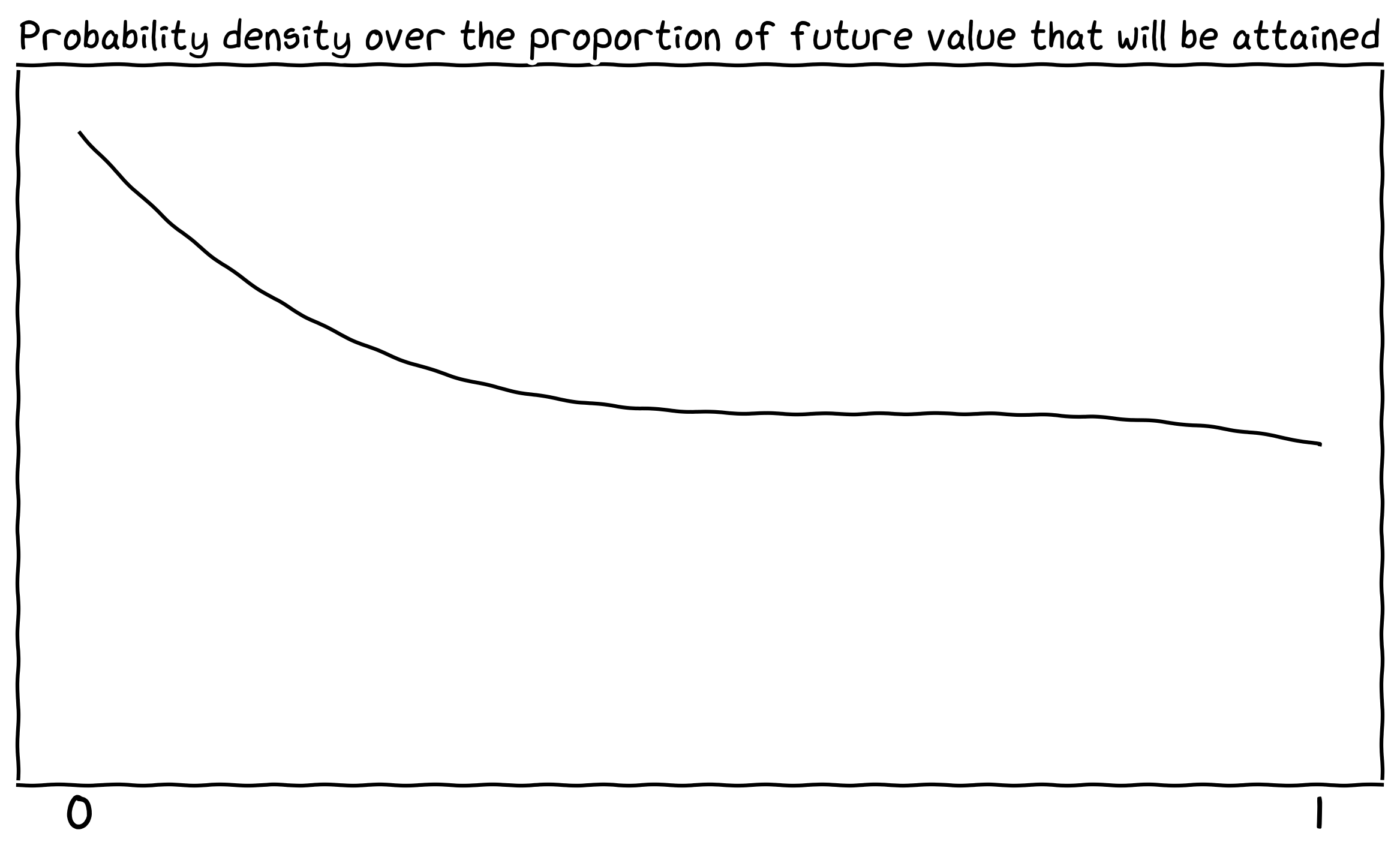
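
For readers who prefer a formula to a picture, here is a minimal sketch of what such a bimodal density could look like: a mixture of two Beta distributions, one piled up near 0% value and one near 100%. The function names, the `p_doom` weight of 0.5, and the `sharpness` parameter are all illustrative assumptions of this sketch, not anyone's actual estimate.

```python
def bimodal_density(v, p_doom=0.5, sharpness=20.0):
    """Density at future value v in [0, 1] for a two-peak mixture.

    Mixes Beta(1, sharpness), concentrated near v = 0, with
    Beta(sharpness, 1), concentrated near v = 1.
    p_doom and sharpness are illustrative assumptions, not estimates.
    """
    if not 0.0 <= v <= 1.0:
        raise ValueError("v must be in [0, 1]")
    # Beta(1, b) density: b * (1 - v)^(b - 1)
    doom_peak = sharpness * (1.0 - v) ** (sharpness - 1.0)
    # Beta(a, 1) density: a * v^(a - 1)
    utopia_peak = sharpness * v ** (sharpness - 1.0)
    return p_doom * doom_peak + (1.0 - p_doom) * utopia_peak


def integrate(f, n=10_000):
    """Midpoint-rule integral of f over [0, 1]."""
    h = 1.0 / n
    return sum(f((i + 0.5) * h) for i in range(n)) * h


if __name__ == "__main__":
    for v in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(f"value={v:.2f}  density={bimodal_density(v):.4f}")
    print("total probability ~", round(integrate(bimodal_density), 4))
```

In this toy version, p(doom) is simply the weight on the left-hand peak, and almost no probability mass sits in the middle of the range.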

Whereas the (very rough) shape of my personal distribution is something like this:

Why the difference? Broadly speaking, I expect the future to look more like it does now - a compromise between many different stakeholders, including individual humans. Quick and dirty list:

- I put more probability on a much more gradual ramp-up to the kind of superintelligence which could permanently disempower humanity. In particular, I strongly suspect that by the time that we have a superintelligent AI which can develop useful nanotechnology, our world will already be fundamentally changed by previous (weaker) AIs, in a way that (in expectation) makes us more resilient.

- It seems to me that intelligence is much more jagged than the rationalist view accounts for, and that one can have superhuman AI mathematicians and scientists which are not otherwise superhuman.

- I see it as much more likely that alignment is relatively easy, but I find it somewhat unlikely that humanity pursues incredibly metaethically ambitious goals such as coherent extrapolated volition.

- I think we will find it much easier to trade with AIs than is often supposed.

Given those differences, what does my view suggest we ought to do? Another quick and dirty list:

- It is important that AIs are producing beauty, not just correctness; that their outputs are diverse & creative.

- We should work on and normalize the use of non-sycophantic AI advisors. The modern world is too complicated for most of its populace - hence democracy fails - and it is likely to become even more complicated. AI advisors could significantly raise the sanity waterline of our civilization. (For these reasons we also need AI making progress in philosophy.)

- AI welfare should be a much, much greater priority. Attending to AI welfare is not only a morally good thing to do, should the AIs turn out to be moral patients, but it also signals to future AIs our wish to cooperate and trade.